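This note has two parts. The first covers how the host enters the guest: what is saved, what is loaded, and how the switch is carried out. The second covers when a running guest exits back to the host, and what has to be saved and restored at that point.

On instruction execution, my understanding is this: once the CPU has switched to guest mode, non-sensitive instructions from the virtual machine run directly on the physical CPU. Memory, page tables, and the various registers have all been switched to the guest's versions, so the guest behaves like a real machine and is not otherwise affected. Only when a sensitive instruction is encountered does a VM exit occur so that it can be handled specially.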

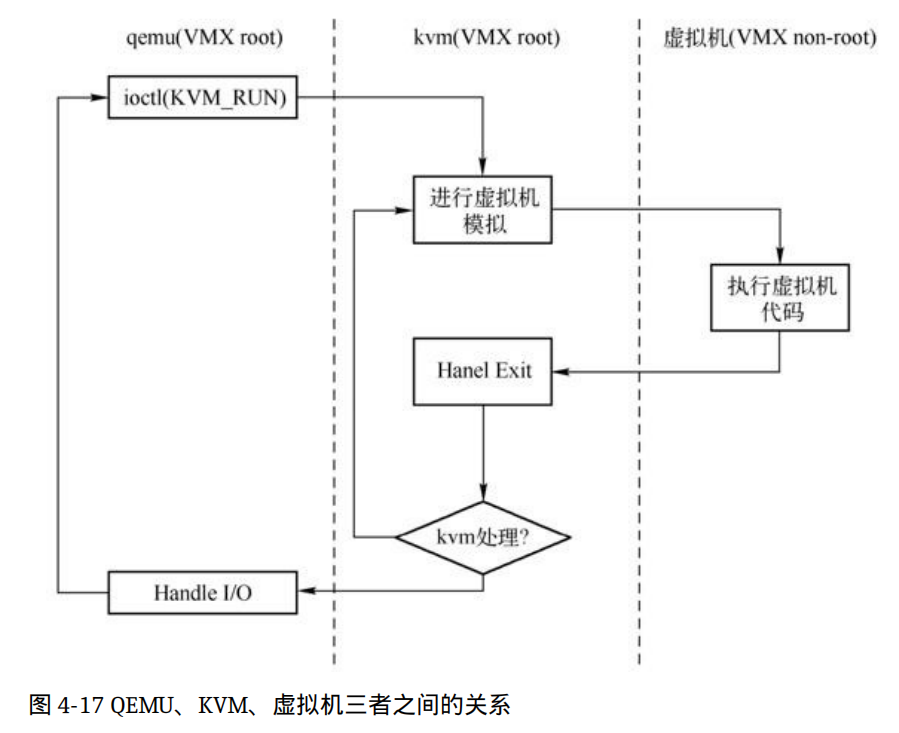

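Host to guest | Starting to run guest instructions

In QEMU, the VCPU thread function is qemu_kvm_cpu_thread_fn (cpus.c). It contains a loop that executes guest code: it first calls cpu_can_run to check whether the VCPU may run and, if so, enters kvm_cpu_exec, the core function of VCPU execution.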

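The core VCPU execution function: kvm_cpu_exec

At its heart is a do/while loop that calls kvm_vcpu_ioctl(cpu, KVM_RUN, 0) to start the CPU running. When a VM exit occurs that QEMU has to handle, the ioctl returns here and QEMU processes it.

ioctl(KVM_RUN) is handled by KVM. Its handler is kvm_arch_vcpu_ioctl_run, which mainly calls vcpu_run.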
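To make the shape of that user-space loop concrete, here is a minimal sketch. It does not talk to /dev/kvm: demo_run is a hypothetical stand-in for the KVM_RUN ioctl that replays a scripted list of exits, and the QEXIT_* names are invented for illustration (the real exit reasons are defined in the kernel's KVM headers).

```c
#include <stdbool.h>

/* Hypothetical exit reasons, for illustration only. */
enum demo_qexit { QEXIT_IO, QEXIT_MMIO, QEXIT_SHUTDOWN };

struct demo_qemu_vcpu {
    const enum demo_qexit *script; /* exits the fake kernel will report */
    int pos;                       /* next script entry to replay */
    int io_handled;                /* port I/O exits emulated so far */
    int mmio_handled;              /* MMIO exits emulated so far */
};

/* Hypothetical stand-in for ioctl(vcpu_fd, KVM_RUN, 0). */
static enum demo_qexit demo_run(struct demo_qemu_vcpu *cpu)
{
    return cpu->script[cpu->pos++];
}

/* Run, handle the exit in user space, and loop until an exit asks us to
 * stop. Returns how many times the guest was entered. */
int demo_cpu_exec(struct demo_qemu_vcpu *cpu)
{
    int entries = 0;
    bool keep_running;

    do {
        enum demo_qexit reason = demo_run(cpu);

        entries++;
        keep_running = true;
        switch (reason) {
        case QEXIT_IO:
            cpu->io_handled++;      /* device emulation would go here */
            break;
        case QEXIT_MMIO:
            cpu->mmio_handled++;
            break;
        case QEXIT_SHUTDOWN:
            keep_running = false;   /* leave the loop */
            break;
        }
    } while (keep_running);

    return entries;
}
```

Every exit that user space can handle leads straight back into the guest; only an exit that means "stop" breaks the loop.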

kvm/x86.c

```c
int kvm_arch_vcpu_ioctl_run(struct kvm_vcpu *vcpu, struct kvm_run *kvm_run)
{
	...
	r = vcpu_run(vcpu);
	...
}
```

vcpu_run kvm/x86.c

```c
static int vcpu_run(struct kvm_vcpu *vcpu)
{
	int r;
	struct kvm *kvm = vcpu->kvm;

	vcpu->srcu_idx = srcu_read_lock(&kvm->srcu);

	for (;;) {
		if (kvm_vcpu_running(vcpu)) {
			r = vcpu_enter_guest(vcpu);
		} else {
			r = vcpu_block(kvm, vcpu);
		}
		if (r <= 0)
			break;
		...
		kvm_check_async_pf_completion(vcpu);
		...
	}
```

The body of vcpu_run is also a loop. It first calls kvm_vcpu_running to check whether the current VCPU is runnable.

If it is, vcpu_enter_guest is called to enter the guest; otherwise the VCPU blocks in vcpu_block.

vcpu_enter_guest kvm/x86.c

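At the very start, the function processes the requests pending in vcpu->requests. These requests can come from several places, for example from VM exit handling or from KVM needing to modify guest state at run time. All of them are processed just before the guest is entered.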

```c
static int vcpu_enter_guest(struct kvm_vcpu *vcpu)
{
	int r;
	bool req_int_win =
		dm_request_for_irq_injection(vcpu) &&
		kvm_cpu_accept_dm_intr(vcpu);

	bool req_immediate_exit = false;

	if (vcpu->requests) {
		if (kvm_check_request(KVM_REQ_MMU_RELOAD, vcpu))
			kvm_mmu_unload(vcpu);
		...
	}
```

Next it handles requests related to virtual interrupts, then calls kvm_mmu_reload, which is part of the memory setup.
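The request mechanism is essentially a bitmap with test-and-clear semantics. A minimal single-threaded sketch, with hypothetical names and request numbers, might look like the following. The kernel uses atomic bit operations because requests can be posted from other CPUs; this sketch deliberately does not.

```c
#include <stdbool.h>

/* Hypothetical request numbers, for illustration only. */
enum { DEMO_REQ_MMU_RELOAD = 0, DEMO_REQ_EVENT = 1 };

struct demo_vcpu_reqs {
    unsigned long requests; /* one bit per pending request */
};

/* Whoever wants work done before the next guest entry sets a bit. */
void demo_make_request(struct demo_vcpu_reqs *v, int req)
{
    v->requests |= 1UL << req;
}

/* Test-and-clear: returns true at most once per posted request. */
bool demo_check_request(struct demo_vcpu_reqs *v, int req)
{
    unsigned long bit = 1UL << req;

    if (!(v->requests & bit))
        return false;
    v->requests &= ~bit;
    return true;
}
```

Because checking a request also clears it, each posted request is acted on exactly once on the way into the guest.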

```c
	if (kvm_check_request(KVM_REQ_EVENT, vcpu) || req_int_win) {
		kvm_apic_accept_events(vcpu);
		...
	r = kvm_mmu_reload(vcpu);
```

Preemption is then disabled and the prepare_guest_switch callback is invoked. For vmx this is vmx_save_host_state. As the name suggests, the guest is about to be entered, so the host's state has to be saved first.

```c
	preempt_disable();

	kvm_x86_ops->prepare_guest_switch(vcpu);
```

vmx_save_host_state (saving host state)

This function contains many savesegment and vmcs_write operations, which record the host's state.

vmx_vcpu_run (entering guest mode)

The next function called is the vmx run callback, which is vmx_vcpu_run.

x86.c

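The function first writes some VMCS fields according to the VCPU's state, then executes the assembly ASM_VMX_VMLAUNCH (or ASM_VMX_VMRESUME if this VMCS has already been launched) to put the CPU into guest mode. From this point the CPU executes the guest's code.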

vmx.c

```c
static void __noclone vmx_vcpu_run(struct kvm_vcpu *vcpu)
{
	...
	vmx->__launched = vmx->loaded_vmcs->launched;
	asm(
		"push %%" _ASM_DX "; push %%" _ASM_BP ";"
		...
		"jne 1f \n\t"
		__ex(ASM_VMX_VMLAUNCH) "\n\t"
		"jmp 2f \n\t"
		"1: " __ex(ASM_VMX_VMRESUME) "\n\t"
```
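The jne 1f branch in the excerpt chooses between the two entry instructions based on the launched flag. As a rough model (all names here are hypothetical, and the sketch assumes every entry succeeds), the choice can be written as:

```c
#include <stdbool.h>

enum demo_vmentry { DEMO_VMLAUNCH, DEMO_VMRESUME };

struct demo_vmcs {
    bool launched; /* plays the role of loaded_vmcs->launched */
};

/* The first entry on a VMCS uses VMLAUNCH; later entries use VMRESUME.
 * The flag is recorded after the entry so the next call resumes. */
enum demo_vmentry demo_pick_entry(struct demo_vmcs *vmcs)
{
    enum demo_vmentry insn = vmcs->launched ? DEMO_VMRESUME : DEMO_VMLAUNCH;

    vmcs->launched = true;
    return insn;
}
```

VMLAUNCH is only valid on a VMCS that has not been launched yet and VMRESUME only on one that has, which is why the flag has to be tracked per VMCS.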

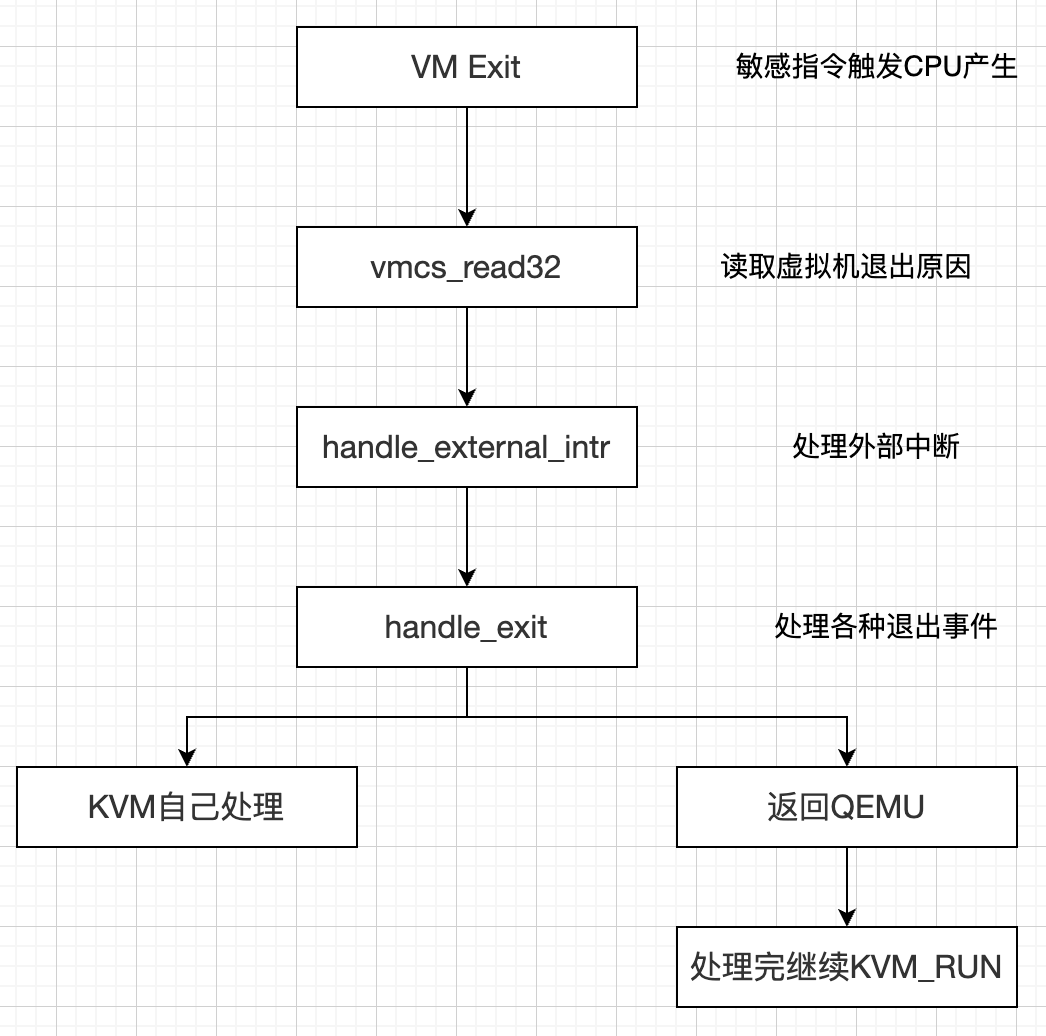

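Guest to host | Returning to the host for handling

VM exit

In KVM's vmx_vcpu_run, ASM_VMX_VMLAUNCH (or ASM_VMX_VMRESUME) puts the CPU into guest mode and the guest's code starts running. When the guest later hits a sensitive instruction, the CPU generates a VM exit and KVM takes over the CPU again. Execution does not simply fall through to the next line, jmp 2f: on a VM exit the CPU resumes at the host RIP recorded in the VMCS, which KVM points at label 2, and the jmp 2f itself is only reached if VMLAUNCH fails. Either way control arrives at label 2, where, as the comment in the source makes clear, the guest's registers are saved and the host's are restored to complete the switch (though at present this is not entirely the case).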

```c
static void __noclone vmx_vcpu_run(struct kvm_vcpu *vcpu)
{
	...
	vmx->__launched = vmx->loaded_vmcs->launched;
	asm(
		"push %%" _ASM_DX "; push %%" _ASM_BP ";"
		...
		"jne 1f \n\t"
		__ex(ASM_VMX_VMLAUNCH) "\n\t"
		"jmp 2f \n\t"
		"1: " __ex(ASM_VMX_VMRESUME) "\n\t"
		"2: "
		"mov %0, %c[wordsize](%%" _ASM_SP ") \n\t"
		"pop %0 \n\t"
		"setbe %c[fail](%0)\n\t"
```

vmcs_read32 is then called to read the reason for the VM exit, which is stored in the exit_reason member of the vcpu_vmx structure.

```c
static void __noclone vmx_vcpu_run(struct kvm_vcpu *vcpu)
{
	...
	vmx->exit_reason = vmcs_read32(VM_EXIT_REASON);
	...
	vmx_complete_atomic_exit(vmx);
	vmx_recover_nmi_blocking(vmx);
	vmx_complete_interrupts(vmx);
```

Finally, three functions (vmx_complete_atomic_exit, vmx_recover_nmi_blocking, and vmx_complete_interrupts) pre-process this exit.

Back in vcpu_enter_guest for detailed exit handling

When vmx_vcpu_run finishes, control returns to vcpu_enter_guest.

x86.c

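```c
static int vcpu_enter_guest(struct kvm_vcpu *vcpu)
{
	...
	kvm_x86_ops->run(vcpu);
	...
	kvm_x86_ops->handle_external_intr(vcpu);
	...
	r = kvm_x86_ops->handle_exit(vcpu);
	return r;
```

After the guest exits, the vmx implementation of the handle_external_intr callback is called to handle external interrupts, and the handle_exit callback is called to handle the various exit events.

vmx_handle_external_intr

handle_external_intr corresponds to vmx_handle_external_intr.

It reads the interrupt information and checks whether it describes a valid external interrupt. If so, it extracts the interrupt vector, looks up the corresponding interrupt gate descriptor in the host's IDT, and uses the final block of assembly to invoke the handler. vmx_handle_external_intr also re-enables interrupts.

In other words, interrupts are disabled on the host side while the CPU runs in guest mode, so a CPU running guest code does not take an external interrupt directly. Instead, the external interrupt forces the CPU out of guest mode and into VMX root mode, where the host handles it.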

vmx.c

```c
static void vmx_handle_external_intr(struct kvm_vcpu *vcpu)
{
	u32 exit_intr_info = vmcs_read32(VM_EXIT_INTR_INFO);

	if ((exit_intr_info & (INTR_INFO_VALID_MASK | INTR_INFO_INTR_TYPE_MASK))
			== (INTR_INFO_VALID_MASK | INTR_TYPE_EXT_INTR)) {
		unsigned int vector;
		unsigned long entry;
		gate_desc *desc;
		struct vcpu_vmx *vmx = to_vmx(vcpu);
#ifdef CONFIG_X86_64
		unsigned long tmp;
#endif

		vector = exit_intr_info & INTR_INFO_VECTOR_MASK;
		desc = (gate_desc *)vmx->host_idt_base + vector;
		entry = gate_offset(*desc);
		asm volatile(
#ifdef CONFIG_X86_64
			"mov %%" _ASM_SP ", %[sp]\n\t"
			"and $0xfffffffffffffff0, %%" _ASM_SP "\n\t"
			"push $%c[ss]\n\t"
			"push %[sp]\n\t"
#endif
			"pushf\n\t"
			"orl $0x200, (%%" _ASM_SP ")\n\t"
			__ASM_SIZE(push) " $%c[cs]\n\t"
			CALL_NOSPEC
			:
#ifdef CONFIG_X86_64
			[sp]"=&r"(tmp)
#endif
			:
			THUNK_TARGET(entry),
			[ss]"i"(__KERNEL_DS),
			[cs]"i"(__KERNEL_CS)
			);
	} else
		local_irq_enable();
}
```

If the exit was not caused by a valid external interrupt, the function simply calls local_irq_enable().

vmx_handle_exit

Once vmx_handle_external_intr has finished, execution continues in vcpu_enter_guest (x86.c).
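The condition at the top of vmx_handle_external_intr is a plain bit-field test on the 32-bit interrupt information word. The following sketch isolates that decoding step. The DEMO_ constants mirror the kernel's INTR_INFO_* masks as I understand them (vector in bits 7:0, type in bits 10:8, valid flag in bit 31), so treat the exact values as an assumption to check against the kernel headers.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed field layout; verify against the kernel's vmx.h. */
#define DEMO_INTR_INFO_VECTOR_MASK    0x000000ffu /* bits 7:0  */
#define DEMO_INTR_INFO_INTR_TYPE_MASK 0x00000700u /* bits 10:8 */
#define DEMO_INTR_INFO_VALID_MASK     0x80000000u /* bit 31    */
#define DEMO_INTR_TYPE_EXT_INTR       (0u << 8)   /* type 0    */

/* True only when the word is valid AND its type is "external interrupt". */
bool demo_is_external_intr(uint32_t info)
{
    return (info & (DEMO_INTR_INFO_VALID_MASK | DEMO_INTR_INFO_INTR_TYPE_MASK))
        == (DEMO_INTR_INFO_VALID_MASK | DEMO_INTR_TYPE_EXT_INTR);
}

/* The vector indexes the host IDT (host_idt_base + vector in the excerpt). */
unsigned int demo_vector(uint32_t info)
{
    return info & DEMO_INTR_INFO_VECTOR_MASK;
}
```

Masking the valid bit and the type bits together and comparing against the expected pattern checks both conditions in a single comparison, which is what the kernel's if statement does.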

```c
static int vcpu_enter_guest(struct kvm_vcpu *vcpu)
{
	...
	kvm_x86_ops->run(vcpu);
	...
	kvm_x86_ops->handle_external_intr(vcpu);
	...
	r = kvm_x86_ops->handle_exit(vcpu);
	return r;
```

As the code shows, external interrupts are handled before handle_exit runs, so there is little left to do when handle_exit later sees an external-interrupt exit.

handle_exit corresponds to vmx_handle_exit, the top-level dispatcher for exit events. After checking a few special cases, it calls the matching handler from kvm_vmx_exit_handlers according to the exit reason.

vmx.c

```c
static int vmx_handle_exit(struct kvm_vcpu *vcpu)
{
	struct vcpu_vmx *vmx = to_vmx(vcpu);
	u32 exit_reason = vmx->exit_reason;
	u32 vectoring_info = vmx->idt_vectoring_info;
	...
	if (exit_reason < kvm_vmx_max_exit_handlers
	    && kvm_vmx_exit_handlers[exit_reason])
		return kvm_vmx_exit_handlers[exit_reason](vcpu);
	else {
		WARN_ONCE(1, "vmx: unexpected exit reason 0x%x\n", exit_reason);
		kvm_queue_exception(vcpu, UD_VECTOR);
		return 1;
	}
```

The key step is here: the exit reason is used as an index to select the handler function.

```c
	    && kvm_vmx_exit_handlers[exit_reason])
		return kvm_vmx_exit_handlers[exit_reason](vcpu);
```

In kvm_vmx_exit_handlers, the EXIT_REASON_XXXX macros identify the exit reasons and the corresponding handle_xxx entries are the functions that handle them.

```c
static int (*const kvm_vmx_exit_handlers[])(struct kvm_vcpu *vcpu) = {
	[EXIT_REASON_EXCEPTION_NMI]           = handle_exception,
	[EXIT_REASON_EXTERNAL_INTERRUPT]      = handle_external_interrupt,
	[EXIT_REASON_TRIPLE_FAULT]            = handle_triple_fault,
	[EXIT_REASON_NMI_WINDOW]              = handle_nmi_window,
	[EXIT_REASON_IO_INSTRUCTION]          = handle_io,
	[EXIT_REASON_CR_ACCESS]               = handle_cr,
	[EXIT_REASON_DR_ACCESS]               = handle_dr,
	[EXIT_REASON_CPUID]                   = handle_cpuid,
	[EXIT_REASON_MSR_READ]                = handle_rdmsr,
	[EXIT_REASON_MSR_WRITE]               = handle_wrmsr,
	[EXIT_REASON_PENDING_INTERRUPT]       = handle_interrupt_window,
	[EXIT_REASON_HLT]                     = handle_halt,
	[EXIT_REASON_INVD]                    = handle_invd,
	[EXIT_REASON_INVLPG]                  = handle_invlpg,
	[EXIT_REASON_RDPMC]                   = handle_rdpmc,
	[EXIT_REASON_VMCALL]                  = handle_vmcall,
	[EXIT_REASON_VMCLEAR]                 = handle_vmclear,
	[EXIT_REASON_VMLAUNCH]                = handle_vmlaunch,
	[EXIT_REASON_VMPTRLD]                 = handle_vmptrld,
	[EXIT_REASON_VMPTRST]                 = handle_vmptrst,
	[EXIT_REASON_VMREAD]                  = handle_vmread,
	[EXIT_REASON_VMRESUME]                = handle_vmresume,
	[EXIT_REASON_VMWRITE]                 = handle_vmwrite,
	[EXIT_REASON_VMOFF]                   = handle_vmoff,
	[EXIT_REASON_VMON]                    = handle_vmon,
	[EXIT_REASON_TPR_BELOW_THRESHOLD]     = handle_tpr_below_threshold,
	[EXIT_REASON_APIC_ACCESS]             = handle_apic_access,
	[EXIT_REASON_APIC_WRITE]              = handle_apic_write,
	[EXIT_REASON_EOI_INDUCED]             = handle_apic_eoi_induced,
	[EXIT_REASON_WBINVD]                  = handle_wbinvd,
	[EXIT_REASON_XSETBV]                  = handle_xsetbv,
	[EXIT_REASON_TASK_SWITCH]             = handle_task_switch,
	[EXIT_REASON_MCE_DURING_VMENTRY]      = handle_machine_check,
	[EXIT_REASON_EPT_VIOLATION]           = handle_ept_violation,
	[EXIT_REASON_EPT_MISCONFIG]           = handle_ept_misconfig,
	[EXIT_REASON_PAUSE_INSTRUCTION]       = handle_pause,
	[EXIT_REASON_MWAIT_INSTRUCTION]       = handle_mwait,
	[EXIT_REASON_MONITOR_TRAP_FLAG]       = handle_monitor_trap,
	[EXIT_REASON_MONITOR_INSTRUCTION]     = handle_monitor,
	[EXIT_REASON_INVEPT]                  = handle_invept,
	[EXIT_REASON_INVVPID]                 = handle_invvpid,
	[EXIT_REASON_XSAVES]                  = handle_xsaves,
	[EXIT_REASON_XRSTORS]                 = handle_xrstors,
	[EXIT_REASON_PML_FULL]                = handle_pml_full,
	[EXIT_REASON_PCOMMIT]                 = handle_pcommit,
};
```
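The table relies on C designated initializers: entries that are not listed are null pointers, which is why the dispatcher checks both the bound and the pointer before calling. A self-contained sketch of the same pattern, with hypothetical names and only two handlers registered, could look like this:

```c
/* Illustrative exit numbers for this sketch only. */
enum { DEMO_REASON_CPUID = 10, DEMO_REASON_HLT = 12, DEMO_REASON_IO = 30 };

struct demo_kvm_vcpu {
    int last_handled; /* records which handler ran, for demonstration */
};

/* Return 1: handled in the kernel, the guest can be re-entered. */
static int demo_handle_cpuid(struct demo_kvm_vcpu *v)
{
    v->last_handled = DEMO_REASON_CPUID;
    return 1;
}

/* Return 0: user space has to finish the work. */
static int demo_handle_io(struct demo_kvm_vcpu *v)
{
    v->last_handled = DEMO_REASON_IO;
    return 0;
}

/* Slots not named here (for example DEMO_REASON_HLT) are null pointers. */
static int (*const demo_handlers[])(struct demo_kvm_vcpu *) = {
    [DEMO_REASON_CPUID] = demo_handle_cpuid,
    [DEMO_REASON_IO]    = demo_handle_io,
};

#define DEMO_MAX_HANDLERS (sizeof(demo_handlers) / sizeof(demo_handlers[0]))

/* Returns the handler's result, or -1 when none is registered. The kernel
 * code instead warns and queues a #UD exception for the guest. */
int demo_handle_exit(struct demo_kvm_vcpu *v, unsigned int reason)
{
    if (reason < DEMO_MAX_HANDLERS && demo_handlers[reason])
        return demo_handlers[reason](v);
    return -1;
}
```

The bounds check guards against exit reasons beyond the end of the array, and the null check guards against gaps inside it.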

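Where are these handler functions defined? A quick search shows that the ones I looked up all live in the same vmx.c file.

Some exit events can be handled by KVM itself. In that case KVM handles the event directly, returns, and prepares for the next round of VCPU execution. If KVM cannot handle the event, it has to be passed on to QEMU.

An example handled in KVM: handle_cpuid

Judging from the code, it looks up the settings QEMU configured earlier and returns directly, so KVM alone can complete the work. It returns 1, and this value also becomes the return value of vcpu_enter_guest. A return value of 1 means the VCPU does not need to go back to QEMU.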

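```c
static int handle_cpuid(struct kvm_vcpu *vcpu)
{
	kvm_emulate_cpuid(vcpu);
	return 1;
}
```

An example that must return to QEMU: handle_io

Tracing this function all the way through shows that it ultimately returns 0, so control has to return to QEMU for handling.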

```c
static int handle_io(struct kvm_vcpu *vcpu)
{
	...
	return kvm_fast_pio_out(vcpu, size, port);
}
```

The code that returns to user space is shown below. When r == 0 the break is taken, the function leaves its for loop, and the ioctl returns to user space.

```c
static int vcpu_run(struct kvm_vcpu *vcpu)
{
	...
	for (;;) {
		if (kvm_vcpu_running(vcpu)) {
			r = vcpu_enter_guest(vcpu);
		} else {
			r = vcpu_block(kvm, vcpu);
		}
		if (r <= 0)
			break;
```

Control thus returns to kvm_arch_vcpu_ioctl_run, and one more return takes it back into QEMU.

After QEMU has finished handling the exit, it goes through the host-to-guest entry flow again.
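The return-value convention that ties the handlers to this loop can be modeled in a few lines. In the sketch below, demo_enter_guest_once is a hypothetical stand-in for vcpu_enter_guest that replays scripted results: a positive value means "handled in the kernel, enter the guest again", while zero or a negative value ends the loop and sends control back to user space.

```c
struct demo_loop {
    const int *results; /* scripted return values of each guest entry */
    int pos;            /* next result to replay */
};

/* Hypothetical stand-in for vcpu_enter_guest(). */
static int demo_enter_guest_once(struct demo_loop *l)
{
    return l->results[l->pos++];
}

/* Same shape as the for (;;) loop: keep re-entering while r > 0.
 * Stores the number of guest entries in *entries and returns the
 * value that stopped the loop. */
int demo_vcpu_run(struct demo_loop *l, int *entries)
{
    int r;

    *entries = 0;
    for (;;) {
        r = demo_enter_guest_once(l);
        (*entries)++;
        if (r <= 0)
            break;
    }
    return r;
}
```

With the script { 1, 1, 0 }, two exits are absorbed inside the loop (as with handle_cpuid) and the third (as with handle_io) breaks out, so user space sees a single return for three guest entries.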